Hey everyone!

Generating 3D models from images is getting easier as time goes by.

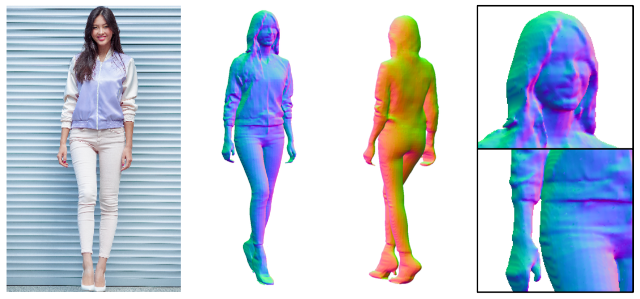

Just came across a project that infers the 3D geometry of clothed humans from an image (see the project's page).

One can actually try it with one's own images through their Google Colab notebook!

Due to limited variation in the training data, the results may sometimes be broken. Here are some useful tips for getting reasonable results.

- Use a high-resolution image. The model was trained on 1024x1024 images, so use at least 512x512 with fine details. Low-resolution images and JPEG artifacts may produce unsatisfactory results.

- Use an image with a single person. If the image contains multiple people, reconstruction quality is likely to degrade.

- A front-facing, standing pose works best (a fashion pose also works).

- Make sure the entire body is within the image. (Note: missing legs are now partially supported.)

- Make sure the input image is well lit. Extremely dark or bright images and strong shadows often create artifacts.

- I recommend a camera angle nearly parallel to the ground. A high camera position may result in distorted legs or high heels.

- If the background is cluttered, use a less complex background or try removing it with https://www.remove.bg/ before processing.

- It was trained on humans only, so anime characters may not work well.
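To catch the most common problems before uploading a photo to the Colab, here is a minimal pre-flight check sketched in Python. It encodes the resolution tips above (at least 512x512, ideally the 1024x1024 training size) plus a rough exposure check. The brightness thresholds (`DARK_LUMA`, `BRIGHT_LUMA`) and the function names are hypothetical assumptions of mine, not part of the project, and the sketch does not detect multiple people, pose or background clutter.

```python
from typing import Iterable, List, Tuple

TRAIN_RES = 1024   # the model was trained on 1024x1024 images
MIN_RES = 512      # the tips recommend at least 512x512
DARK_LUMA = 40     # hypothetical threshold: mean luma below this counts as "very dark"
BRIGHT_LUMA = 215  # hypothetical threshold: mean luma above this counts as "very bright"


def mean_luma(pixels: Iterable[Tuple[int, int, int]]) -> float:
    """Average Rec. 601 luma (0-255) over an iterable of (R, G, B) pixels."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    if count == 0:
        raise ValueError("no pixels given")
    return total / count


def check_input(width: int, height: int, luma: float) -> List[str]:
    """Return human-readable warnings; an empty list means no issue was detected."""
    warnings = []
    # "At least 512x512" means both sides must reach the minimum.
    short_side = min(width, height)
    if short_side < MIN_RES:
        warnings.append(f"resolution too low: shorter side {short_side}px < {MIN_RES}px")
    elif short_side < TRAIN_RES:
        warnings.append(f"below training resolution: shorter side {short_side}px < {TRAIN_RES}px")
    # Rough exposure check on the average brightness of the whole image.
    if luma < DARK_LUMA:
        warnings.append(f"image looks very dark (mean luma {luma:.0f})")
    elif luma > BRIGHT_LUMA:
        warnings.append(f"image looks very bright (mean luma {luma:.0f})")
    return warnings


if __name__ == "__main__":
    # Example: a 600x800 photo whose pixels are all mid-grey.
    grey = [(128, 128, 128)] * 4
    print(check_input(600, 800, mean_luma(grey)))
```

To run it on a real photo, one could load the image with Pillow: `img.size` gives `(width, height)`, and `ImageStat.Stat(img.convert("L")).mean[0]` gives the mean grey level, which can be passed as `luma` instead of calling `mean_luma`. A mean-brightness check will not catch strong local shadows, so it is only a first filter.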

Afterwards, one can bring the model into Blender, clean it up (if needed), animate it with Mixamo, and then import it into Unity or Unreal Engine to turn it into an animated game object or digital twin.

Best,
